Many of us no longer get our news from the radio, newspapers, or even cable television. Social media has become the dominant channel for communicating news across the world. However, it is also the main avenue for misinformation. From world leaders to corporations and celebrities, everybody uses social media to talk to the world. Twitter, Facebook, TikTok, and Instagram have become central hubs for information dissemination.

While these platforms offer incredible opportunities for connectivity and sharing, they also present significant challenges in combating misinformation. The internet was supposed to be decentralized, but the lack of regulation has become a major problem.

This article explores the complexities of addressing misinformation, arguing both sides of the debate. We acknowledge the real and harmful effects of misinformation while also considering the importance of preserving the internet’s open, decentralized spirit.

The Reality of Misinformation

Misinformation, or false information spread unintentionally, is rampant across social media platforms. From viral hoaxes to manipulated images and misleading headlines, misinformation can quickly gain traction, leading to widespread confusion and distrust. The consequences can be dire, ranging from public health crises fueled by false medical advice to political unrest incited by fabricated stories.

Legal disputes and cases of slander underscore the gravity of misinformation’s impact. High-profile incidents such as the defamation lawsuit brought against Elon Musk by British cave explorer Vernon Unsworth highlight how unfounded claims on Twitter can have real-world repercussions. Musk’s baseless accusation against Unsworth threatened the explorer’s reputation and led to a costly legal battle, which a jury ultimately decided in Musk’s favor. The case emphasized the need for accountability in online trash talk that can have real-life consequences.

Defamation cases have been on the rise due to the increasing complexity of misinformation on social media. Individuals and businesses have found themselves targeted by false claims and malicious rumors, leading to damaged reputations and financial losses. For example, in 2015, actor James Woods sued a Twitter user for $10 million over a tweet falsely accusing him of being a cocaine addict.

While Woods eventually dropped the lawsuit, things have only gotten worse since the incident. To the credit of these platforms, they spend hundreds of millions on enforcing their community guidelines. The problem is, we’ve just gotten very good at making our fake news seem real.

Artificial Intelligence and Deep Fakes

Artificial intelligence (AI) has significantly complicated the issue of misinformation on social media platforms. AI algorithms, designed to optimize user engagement and content relevance, can inadvertently amplify misinformation by promoting sensationalized or polarizing content. They analyze user behavior and preferences to tailor content recommendations, often leading to the proliferation of misleading or false information that aligns with users’ biases. This can create a bubble of fake news that, in the worst cases, radicalizes an individual.
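To make the amplification effect concrete, here is a minimal sketch of an engagement-ranked feed. It is a toy model, not any platform’s actual algorithm: the `Post` fields, the scoring formula, the weight given to shares, and the sample numbers are all assumptions chosen for illustration. The point is only that a ranking built on engagement never looks at accuracy.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    accurate: bool      # known to us for the demo, invisible to the ranker
    clicks: int
    shares: int
    impressions: int


def engagement_score(post: Post) -> float:
    """Interactions per impression. Accuracy is deliberately not an input."""
    if post.impressions == 0:
        return 0.0
    # Hypothetical weighting: a share counts twice as much as a click.
    return (post.clicks + 2 * post.shares) / post.impressions


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts from most to least engaging."""
    return sorted(posts, key=engagement_score, reverse=True)


# Made-up sample data: the false post is simply the most clickable one.
posts = [
    Post("City council publishes budget report", True, 40, 5, 1000),
    Post("Miracle cure doctors don't want you to know", False, 180, 90, 1000),
    Post("Local team wins regional final", True, 90, 20, 1000),
]

feed = rank_feed(posts)
for p in feed:
    print(f"{engagement_score(p):.2f}  accurate={p.accurate}  {p.title}")
```

In this made-up data set the false post lands at the top of the feed purely because it draws the most clicks and shares. Any fix has to add a signal the ranking does not currently have, such as a fact-check label or a source-reliability score.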

Furthermore, AI-powered deepfake technology is an even more formidable challenge. Deepfakes use AI to create realistic but fabricated audio and video content. The results can easily deceive viewers by manipulating speeches, interviews, and other media. This erodes trust in visual evidence and enables malicious actors to disseminate false narratives with an unprecedented appearance of authenticity.

As AI continues to advance, addressing the complexities of misinformation requires innovative solutions that leverage technology while safeguarding against its misuse. Efforts to develop AI-based detection tools, enhance media literacy education, and promote ethical content moderation practices are crucial steps in mitigating the harm these advances are causing.

The Cambridge Analytica Scandal and the Trump Elections

The Cambridge Analytica scandal was the biggest wake-up call since the Snowden revelations, this time about how personal data can be used to mess with people’s minds during elections. Imagine finding out that a consulting company harvested data from millions of Facebook users without their consent! They did it to send out targeted political ads, playing on people’s likes and dislikes without them even knowing. It’s like they had this sneaky playbook to manipulate voters, knowing just what buttons to push.

You can argue that the 2016 U.S. election would not have been as close as it was if Cambridge Analytica hadn’t done the marketing for one side. The firm used all that personal information to send out customized messages to voters, trying to tilt the scales in its client’s favor. It got folks seriously riled up, demanding changes to prevent this kind of digital manipulation from happening again.

It’s not just the U.S.; similar scandals have rocked other parts of the world, like India during the Modi elections. There were allegations of social media manipulation and fake news spreading like wildfire, and many former workers from political social media cells came out with their stories years later. These scandals highlight the urgent need for tighter regulations and better safeguards to protect the integrity of democratic processes everywhere. Keep in mind that this was all seven to eight years ago. Companies have gotten much better at exploiting data on social media since then.

Protection from Misinformation

Here are a few ways social media platforms are attempting to protect users from misinformation.

Safeguards on Social Media Platforms

Social media platforms have recognized the urgent need to combat misinformation and have implemented various safeguards to address this issue. X, formerly known as Twitter, for instance, has introduced labels and warnings on tweets containing misleading information. These labels aim to provide users with additional context and direct them to more credible sources.

Similarly, Facebook has partnered with fact-checking organizations to flag and reduce the visibility of false content on its platform, while also giving users the option to report misleading posts. TikTok has implemented a “Fact Check” tool, prompting users to verify information before sharing it, thereby encouraging responsible sharing practices. Additionally, Instagram has taken steps to reduce the spread of misinformation by limiting the visibility of false content and offering educational resources on media literacy.

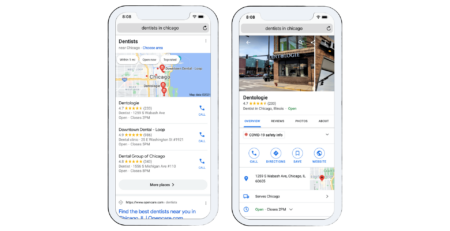

However, misinformation on TikTok may be the hardest to deal with. The platform’s format, which revolves around short-form videos, presents a unique challenge: viewers spend only a few seconds on each clip, which leaves little room for context or scrutiny. Despite measures such as the “Fact Check” tool, the platform still faces significant hurdles in effectively addressing the spread of false or misleading content.

Due to the fast-paced nature of TikTok’s content, misinformation gains traction quickly, before it can be fact-checked or moderated. Additionally, the platform’s algorithm-driven content discovery can inadvertently amplify sensationalized or misleading videos, further perpetuating misinformation. TikTok has taken steps to mitigate these challenges by increasing transparency around its content moderation practices and providing users with resources to report false information. However, the platform’s defenses against misinformation still have significant gaps.

Challenges and Recommended Improvements

Despite these efforts, effectively combating misinformation on social media platforms is still tough. The sheer volume of content being shared makes it difficult for platforms to monitor and moderate it effectively. Moreover, the rapid dissemination of misinformation can outpace fact-checking efforts, allowing false information to spread unchecked before it can be addressed.
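A rough back-of-the-envelope calculation shows why speed matters so much. The sketch below assumes, purely for illustration, that a post starts with 100 views and that its audience grows by 50% every hour until a fact-check lands. The function name, the starting figure, and the growth rate are hypothetical and not measured from any platform.

```python
def reach_before_review(initial_views: int,
                        growth_per_hour: float,
                        review_delay_hours: int) -> int:
    """Views accumulated when the review arrives, assuming constant hourly growth."""
    views = float(initial_views)
    for _ in range(review_delay_hours):
        views *= growth_per_hour
    return round(views)


# Compare a fast, a typical, and a slow review under the same assumed growth rate.
for delay in (1, 6, 12):
    print(f"review after {delay:>2} h -> {reach_before_review(100, 1.5, delay)} views")
```

Under these assumptions, a review after one hour reaches the post at 150 views, after six hours at roughly 1,100, and after twelve hours at roughly 13,000. Real spread is messier than a constant growth rate, but the compounding is the point: every hour of delay multiplies the audience that saw the claim without a correction.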

Our current fact-checking and moderation systems are very far from perfect. It’s common for users to see a sensitive-content screen on harmless Instagram posts while videos of fatal traffic accidents go uncensored. Algorithms designed to prioritize engaging content may inadvertently amplify sensationalized or misleading information. That means false news spreads even faster, and it’s because of the way we’ve designed our platforms.

To address these challenges, social media platforms should invest in improving their content moderation technologies and increasing transparency in their algorithms. Enhanced collaboration with fact-checking organizations and academic institutions can bolster efforts to identify and address misinformation effectively. Furthermore, platforms should provide users with more robust tools and resources to verify information independently, empowering them to make informed decisions about the content they encounter online.

Self-Protection Measures

In addition to relying on platform safeguards, individuals can take proactive steps to protect themselves. Cultivating critical thinking skills is essential to discerning reliable information from falsehoods. By verifying sources, cross-referencing information, and being cautious of sensationalized content, individuals can reduce their susceptibility to misinformation. Media literacy education plays a crucial role in equipping individuals with the necessary skills to navigate the digital landscape responsibly. Educational initiatives aimed at teaching users how to critically evaluate information and identify misinformation are essential in empowering individuals to become discerning consumers of online content.

Furthermore, users can actively contribute to combating misinformation by reporting false or misleading content to platform moderators. Flagging suspicious content and having constructive discussions on the importance of accuracy and integrity in information sharing are the best ways individuals can play a role in creating a healthier online environment. Ultimately, a combination of platform safeguards, improved technologies, and individual responsibility is necessary to address the complex challenge of misinformation on social media effectively.

Platform-Specific Challenges

Each social media platform faces unique challenges in combating misinformation. Twitter, known for its real-time nature and character limit, struggles to monitor and moderate content effectively. The platform has faced criticism for its inconsistent enforcement of policies and the proliferation of misinformation during breaking news events.

Facebook, as one of the largest social media platforms, grapples with the spread of misinformation through its vast user base and algorithmic recommendation systems. The platform’s role in facilitating the dissemination of false information during elections and public health crises has sparked calls for stricter regulation and accountability measures.

TikTok, with its predominantly young user demographic and algorithm-driven content discovery, faces challenges in curbing the spread of misinformation and harmful content. The platform has been criticized for its lax content moderation policies and susceptibility to viral misinformation campaigns.

Instagram, popular for its visual-centric content and influencer culture, confronts issues related to image manipulation and deceptive advertising. The platform’s emphasis on aspirational lifestyles and curated content can contribute to the spread of unrealistic or misleading information.

Conclusion

Navigating misinformation on social media platforms requires a multifaceted approach that acknowledges both its harmful impact and the available safeguards for protection. It’s important to remember the gravity of the situation. We often let misinformation slide because we assume it comes from trolls. However, you’d be surprised at how seriously people take things they read on the internet.

Fake news has real-world consequences. You need to protect yourself with proactive measures such as fact-checking and critical thinking, while platform regulations offer further avenues for combating the spread of misinformation. By remaining vigilant and discerning consumers of information, individuals can contribute to a healthier online ecosystem where truth prevails over falsehoods. Ultimately, fostering a culture of accountability and responsibility among users and platform providers is essential to creating a safe virtual environment.