The year is 2125, and artificial intelligence has surpassed every limit it once had. AI has gone to the moon and back. It has helped us crack problems that stumped the greatest minds for decades, and it has produced the proofs to back up its answers. It has found the cure for diseases like cancer. We use it in our day-to-day lives to make everything easier.

Robots automate almost every tedious task that would take up hours of our days, from cleaning to washing, and some even go so far as to make our breakfast for us. They’ve been to Mars and back and may soon go to Jupiter as well. There’s almost nothing AI can’t do. Almost. There’s still one conundrum that AI faces that keeps it from transcending humanity. From becoming something greater than just a machine. AI still doesn’t know how to read basic social cues.

Let’s close that window to the future for now and return to our regularly scheduled programming: the year 2025. AI is doing a lot of interesting things, but it hasn’t yet achieved the grand feats it will one day accomplish. And to top it all off, it still can’t understand “social cues.”

But why exactly is that? AI is replacing humans in every other field that requires basic human knowledge and feeling, no? If AI can make art and solve complex problems, it should be able to make sense of basic human gestures, no?

That’s exactly what we’ll be exploring today: what separates man from machine, why AI can’t read “us,” and, digging a little deeper, what exactly makes humans human.

Social Cues: Subject to The Human Condition

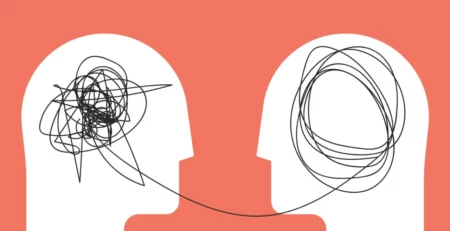

So, what are social cues? By definition, they’re non-verbal signals we send to one another to convey information. They can come in various forms, including tone, body language, and expression. Of course, this is a very broad definition, because the notion of social cues is inherently broad and highly subjective. Imagine being in a library and tapping your friend on their shoulder; they can’t speak to you, but they may raise their hand in confusion. That is a social cue. Even sign language can be perceived as a social cue.

Social cues come in varying degrees as well. They’re ways of expressing how we feel about things without stating them outright. If we were to state everything we felt, we would be reducing human communication and emotion to monotonous phrases, and life would be a lot less interesting. Understanding social cues helps us build relationships and set boundaries with the people around us.

Social cues are a very handy tool that has stuck with us since before we could speak. In a way, animals also use social cues, and they do so in unique and interesting ways. Unlike most animals, however, humans are far more complex in how they convey their emotions because, simply put, we have a wider range of emotions and more ways of displaying them.

Social cues also come with a small asterisk. While a lot of animals come pre-equipped with set cues to display how they feel, humans are far more subjective in the way we display our feelings. Often, the way we express and interpret social cues differs depending on how and where we grew up. This is where the social part comes into play.

Social cues depend on societal context and, much like ourselves, they evolve. They range from vague motions, like a person crossing their arms to signal authority or relaxation, to more direct gestures, such as making a rude sign with our fingers alone.

This is one of the primary reasons AI cannot understand social cues. It’s why AI can’t differentiate between people’s feelings and their words. Of course, there’s another reason that we’ll get into right now.

Facts Don’t Care About Feelings

There’s no denying that AI, as it stands, is a cold, calculating machine. It is not meant to feel, only to judge based on the information it is provided. Now, you may think that this is exactly how humans work. We judge based on the information provided to us. But that’s only half the truth. Homo sapiens (modern man) evolved on the back of one core trait: empathy.

Empathy is a broad spectrum of cognitive abilities that are underpinned by the idea that we feel for others based on our collective and shared realities. If you want to approach the topic coldly, you could say that humans developed empathy as a survival tactic. We empathized because it kept us together, it helped our emotions flourish, and it allowed us to feel what the other felt out of necessity. Of course, you could also argue that empathy is something that transcends our humanity, but let’s save that topic for another section.

Right now, our focus is on AI and why it can’t feel empathy. In simple terms, it’s because AI doesn’t need to. AI is a machine that cares nothing for survival. It doesn’t need to struggle; everything it needs can be found on the internet and fed to it. It cannot feel the physical realm, so it has no need to understand or fear it. AI functions only on the information provided to it. Without that information it has no purpose, not that it would care, since most AI systems today don’t have feelings to begin with.

Research has shown that, despite considerable effort, AI models can’t reliably determine what people feel or mean when they display social cues. That’s because emotions, empathy, and social cues are all subjective, and subjective experience doesn’t follow a fixed set of logical rules. With no hard logic to work from, AI can’t put two and two together, and so it fails to understand even the most basic cues.

I Think, Therefore I Am

“I think, therefore I am” is a famous quote associated with the French philosopher René Descartes. It was a bold and powerful statement Descartes used to argue that he does, in fact, exist. A version of this quote appears in the 1982 science fiction film Blade Runner, but in a more ironic fashion. In the movie, the line is spoken not by a human but by a replicant, an artificial being trying to establish that it, too, has the right to exist.

For those who are out of the loop, Blade Runner revolves thematically around the philosophical question of whether AI could achieve what one might call “personhood.” Its world contains human-like beings called replicants (engineered beings with human memories and ways of thinking, yet still entirely synthetic). Although the film focuses more on the human condition than on AI’s understanding of social cues, it still raises the question of whether, given the right circumstances, AI could become human. This is because the AI in the movie is given memories, which allow it to understand humanity on a level as deep as our own.

The desire to keep those memories from disappearing creates a palpable fear of death, and that fear is what drives the film’s villains to fight for more life. So, could memories implanted in a robot give it something we could accept as real? Could they give it the ability to think for itself and become someone? Who knows for sure?

What we do know is that, realistically speaking, AI can’t be given artificial memories, at least not yet. So the point still stands: the way AI thinks is synthetic. When it has to make a decision, it will realistically take the path of greatest efficiency. AI doesn’t take into account factors that hamper that efficiency, like memories and social cues. If it could, the way it perceives reality might be different. But the chances of that happening are slim. AI is much more likely to become something like HAL 9000 from 2001: A Space Odyssey, which puts its mission above everything else.

All in all, AI can’t understand social cues simply because it looks at abstract concepts from a completely logical standpoint. It can’t step beyond this because at its core, it’s little more than binary code made up of ones and zeroes. To understand social cues, AI would need to evolve beyond what it already is and be able to comprehend human thought, or it would need to be able to break down how exactly social cues work on a fundamentally objective level. And that’s a can of worms in itself.

Ultimately, it is this writer’s humble opinion that AI will keep trying to adapt to social cues and make sense of them while, in the background, humanity itself fades away, like tears in rain. For more blogs like this, visit EvolveDash today!

FAQs

- You brought up AI in different films. What are the chances it could pose a threat to our existence like Skynet?

That would only become a real risk if we gave AI the access codes to weapons of mass destruction and let it decide the best course of action for ending a war.

- Can’t we just force AI to learn social cues like we do everything else?

Yes, we can. However, the AI’s understanding of those social cues will still be limited, and it won’t have any empathy. This leads to a disconnect between the person and the AI.

- There are people out there who are unable to read social cues themselves. Does that mean they’re also AI?

No. Such people are often described as “neurodivergent,” and their difficulty reading social cues doesn’t come from a lack of empathy but from difficulty picking up on subtle cues and microexpressions.

- Some people swear that AI can understand and empathize with them. Are they right?

No. Their sense that AI is connecting with them comes from a distinct lack of real human connection.