The lines between humans and machines are blurring on social media. Once simple tools for automating routine tasks, bots have evolved into sophisticated agents capable of manipulating public discourse, defrauding users, and eroding trust in online platforms.

This article explores the different types of social media bots, their impact, and the methods used to detect and prevent them.

What are Social Media Bots?

Social media bots are automated programs designed to perform specific tasks on social media platforms. These tasks can range from simple actions like liking posts to more complex ones, such as spreading information. Bots can operate on various social media platforms, including X, Facebook, Instagram, and others.

Legitimate Bots

Legitimate bots are designed to enhance user experience and provide valuable services. Customer service bots assist users by answering common questions and providing support. For instance, a customer service bot on a retail site might help users track their orders or return products.

News bots automatically share news updates, weather reports, and other relevant information to keep users informed. Content aggregation bots collect and share content based on user interests, making it easier for users to find relevant information.

Malicious Bots

Malicious bots, on the other hand, are designed with harmful intent. These bots can undermine the integrity of social media platforms and have negative consequences for their users.

Spam bots flood social media with unsolicited messages, links, and advertisements. The links often lead to phishing sites or scams. Fake follower bots artificially inflate follower counts, making accounts appear more popular than they really are. This can deceive users and skew perceptions of an account's influence.

Impersonation bots mimic real users, often to deceive others, steal personal information, or spread malware.

The Bot Problem

The use of bots on social media has been increasing rapidly, and automated activity now makes up a substantial share of online traffic. Imperva's 2023 Bad Bot Report found that bad bots accounted for 30.2% of all internet traffic in 2022, up from the previous year. That figure covers the internet as a whole rather than social media alone, but it illustrates the scale of the problem.

The growing prevalence of bots on social media is significant for several reasons. Malicious bots can erode trust in social media platforms. When users encounter spam, fake followers, or misinformation, their trust in the platform and its content can diminish.

Bots can disproportionately influence public opinion by amplifying certain voices or spreading misinformation. This can have serious implications during elections, public debates, and other critical events.

Businesses can suffer financially from bot activities. For example, fake follower bots can lead companies to invest in influencers based on inflated metrics, resulting in wasted marketing budgets. Spam bots can drive users away from platforms, reducing ad revenues.

Bots can also be used for various cyberattacks, including data breaches and the spread of malware. This poses significant security risks to users and platforms alike.

Early Uses of Bots

When social media platforms first emerged, the use of bots was relatively benign and primarily focused on enhancing user experience and automating repetitive tasks. Early bots were designed to help users and businesses schedule posts in advance.

This allowed for consistent content distribution without constant human intervention. Tools such as Hootsuite and Buffer automate this process, enabling users to plan their social media activity more effectively.

Bots were employed to aggregate content from various sources and share it with users based on their interests. For example, a bot could collect news articles about technology and post them on a tech enthusiast’s feed, keeping users informed without requiring them to search for content themselves.

Customer service bots were integrated into social media platforms to handle routine support requests. These bots could respond to common queries, provide basic information, and guide users through simple troubleshooting processes. This automation helped businesses offer 24/7 support, improving customer satisfaction.

Bots were also used to enhance user engagement by liking posts, following accounts, and even commenting on content. These actions helped users grow their networks and increase interaction on platforms. For instance, a bot could like a user’s posts based on specific hashtags.

These early uses of bots were generally well-received and contributed positively to the social media ecosystem. They made social media management easier, provided timely information, and improved customer interactions.

Shift to Malicious Uses

As social media platforms grew in popularity and influence, the nature of bot usage began to change. The potential to reach large audiences quickly attracted individuals and organizations with malicious intentions. Several factors contributed to the shift towards the negative use of bots:

Financial Incentives

The commercial aspect of social media became a significant driver for malicious bot activity. Bots could generate fake likes, followers, and comments, creating the illusion of popularity and influence. This false engagement could be sold to individuals and businesses looking to boost their social media presence. The demand for quick and easy growth led to a proliferation of bots designed to game the system.

Political Manipulation

Social media became a critical platform for political discourse, and bots were soon deployed to manipulate public opinion. During elections and political campaigns, bots could be used to spread propaganda, amplify divisive content, and create the appearance of widespread support or opposition. High-profile instances include the 2016 U.S. presidential election, where bots were used to spread misinformation in an attempt to sway voters.

Misinformation and Fake News

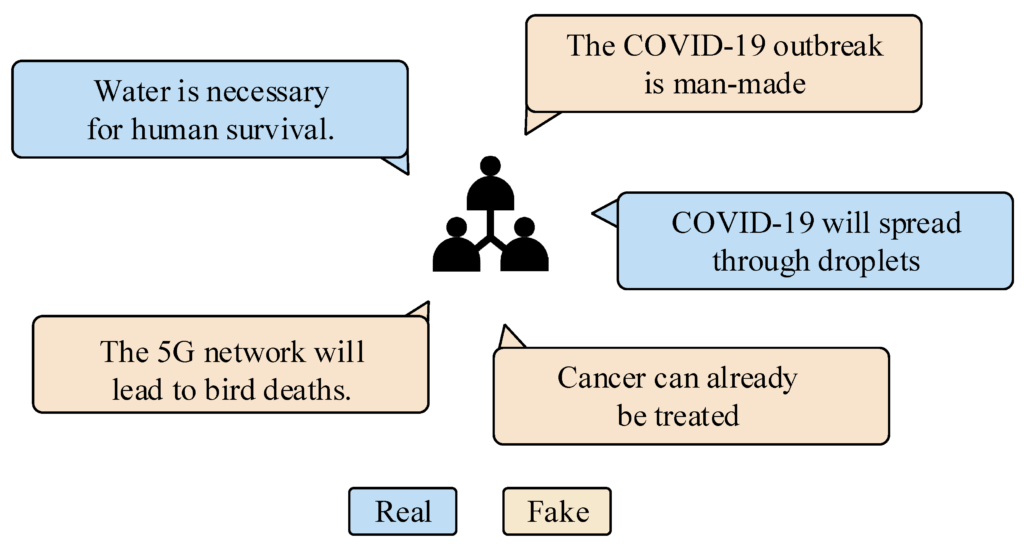

The ability of bots to spread information rapidly and widely made them ideal tools for disseminating misinformation and fake news. Bots could post and share false information repeatedly, making it appear credible and widely accepted. This tactic has been used to sow confusion, undermine trust in legitimate news sources, and influence public perception on various issues.

Spam and Scams

As social media platforms grew, they became prime targets for spam and scams. Bots were programmed to flood users with unsolicited messages, advertisements, and links to malicious websites. These spam bots aim to trick users into clicking on harmful links, providing personal information, or purchasing fraudulent products.

Cyber Attacks

Bots have also been used in more direct forms of cyberattack. For example, botnets (networks of compromised devices under an attacker's control) can launch coordinated attacks to overwhelm systems, steal data, or spread malware. These attacks can cause significant damage to individuals, businesses, and even national infrastructure.

The shift to malicious uses of social media bots has created numerous challenges for platform operators, regulators, and users. The once-beneficial tools have become vectors for harm.

Types of Social Media Bots

Social media bots fall into several broad types, each posing its own challenges and risks.

Spam Bots

Spam bots are among the most common types of social media bots. Their primary purpose is to flood platforms with unsolicited and often irrelevant content. These bots are typically used to promote products, services, or websites, but they can also be involved in more malicious activities, such as phishing and spreading malware.

Spam bots are designed to post a large number of messages quickly, sometimes hundreds or thousands of posts in a short period. Their messages are often repetitive, either identical or very similar to one another. These bots perform automated actions such as posting comments, sending direct messages, and tagging users.
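
To make these two traits concrete, here is a minimal Python sketch of how one account's recent posts might be screened for bursts of activity and repeated messages. The function name `spam_signals`, the 10-minute window, and the case/whitespace normalization are illustrative assumptions, not any platform's actual rules.

```python
from collections import Counter
from datetime import datetime, timedelta

def spam_signals(posts, window=timedelta(minutes=10)):
    """Return (peak posts in any window, duplicate ratio) for ONE account.

    posts: list of (datetime, text) tuples. Hypothetical helper for illustration.
    """
    posts = sorted(posts)  # chronological order
    peak, start = 0, 0
    for end in range(len(posts)):
        # Move the window start forward until the window spans at most `window`
        while posts[end][0] - posts[start][0] > window:
            start += 1
        peak = max(peak, end - start + 1)
    # Normalize case and whitespace, then count messages that repeat an earlier one
    counts = Counter(" ".join(text.lower().split()) for _, text in posts)
    repeats = sum(n - 1 for n in counts.values())
    return peak, (repeats / len(posts) if posts else 0.0)


t0 = datetime(2024, 1, 1, 12, 0)
burst = [(t0 + timedelta(seconds=30 * i), "Buy now at example.com!") for i in range(10)]
print(spam_signals(burst))  # (10, 0.9)
```

Ten identical posts in under five minutes produce a peak of 10 and a duplicate ratio of 0.9. Where to set the alarm thresholds is a policy choice each platform would tune.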

They frequently post promotional content, often including links to external websites. These links can lead to legitimate sites, but they often direct users to scams or phishing pages. They leave comments on popular posts to gain visibility. These comments can be anything from advertisements to generic statements designed to draw attention to their profiles.

Spam bots can send direct messages to users, often containing promotional material or malicious links. They use trending hashtags to insert their content into popular conversations, increasing the likelihood that users will see their posts.

Fake Follower Bots

Fake follower bots are used to artificially inflate the follower counts of social media accounts. This type of bot plays a significant role in creating the illusion of popularity and influence.

They follow a large number of accounts in a short amount of time. These bots rarely engage in meaningful interactions with the accounts they follow. They might like posts occasionally, but they seldom comment or share content. They often have incomplete profiles with few, if any, posts. Their profile pictures and bios are typically generic or entirely absent.
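
The warning signs above can be combined into a simple checklist score. The sketch below is one hypothetical way to do that in Python; the `Profile` fields and every threshold (more than 100 follows per day, fewer than 3 posts) are assumptions for illustration, and it can only detect a missing bio or photo, not a generic one.

```python
from dataclasses import dataclass

@dataclass
class Profile:
    following: int            # number of accounts this profile follows
    account_age_days: int
    posts: int
    comments_and_shares: int  # meaningful interactions with others
    has_bio: bool
    has_profile_photo: bool

def fake_follower_score(p: Profile) -> int:
    """Count how many warning signs apply (0 to 4). Thresholds are hypothetical."""
    score = 0
    if p.following / max(p.account_age_days, 1) > 100:  # follows many accounts quickly
        score += 1
    if p.comments_and_shares == 0:                      # seldom comments or shares
        score += 1
    if p.posts < 3:                                     # few, if any, posts
        score += 1
    if not (p.has_bio and p.has_profile_photo):         # missing profile details
        score += 1
    return score


suspect = Profile(following=5000, account_age_days=7, posts=0,
                  comments_and_shares=0, has_bio=False, has_profile_photo=False)
print(fake_follower_score(suspect))  # 4
```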

By following specific accounts, fake follower bots increase the number of followers these accounts appear to have. This artificial boost can make an account look more popular than it truly is.

High follower counts can influence how real users perceive an account. People are more likely to trust and follow accounts that seem popular. Influencers and businesses may use fake follower bots to attract real followers and potential customers.

Brands and companies often pay influencers based on their follower counts and engagement rates. Fake followers can lead to misleading metrics, causing companies to invest in influencers who may not have genuine reach or influence.

Misinformation and Propaganda Bots

Misinformation and propaganda bots are designed to spread false information and manipulate public opinion. These bots can have significant social and political impacts by amplifying false narratives and sowing discord.

These bots often post content that is politically or socially charged, designed to provoke strong reactions. They often work in networks, where multiple bots share and amplify each other’s posts. This creates the illusion of widespread support or opposition.
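
One way to surface such networks is to look for accounts that repeatedly share the same items almost simultaneously. The Python sketch below assumes share events arrive as `(account, item_id, unix_time)` tuples. The function name, the 60-second window, and the three-item minimum are hypothetical choices, not a documented platform method.

```python
from collections import defaultdict
from itertools import combinations

def co_sharing_pairs(shares, window_seconds=60, min_items=3):
    """Find account pairs that repeatedly share the same items at nearly the same time.

    shares: iterable of (account, item_id, unix_time) tuples.
    Returns {(account_a, account_b): set_of_items} for pairs that shared at least
    `min_items` distinct items within `window_seconds` of each other.
    """
    by_item = defaultdict(list)
    for account, item, ts in shares:
        by_item[item].append((account, ts))

    pair_items = defaultdict(set)
    for item, entries in by_item.items():
        # Compare every pair of shares of the same item (quadratic, fine for a sketch)
        for (a, ta), (b, tb) in combinations(entries, 2):
            if a != b and abs(ta - tb) <= window_seconds:
                pair_items[tuple(sorted((a, b)))].add(item)

    return {pair: items for pair, items in pair_items.items() if len(items) >= min_items}


shares = [("A", "p1", 0), ("B", "p1", 5), ("A", "p2", 100), ("B", "p2", 110),
          ("A", "p3", 200), ("B", "p3", 230), ("C", "p1", 5000)]
print(co_sharing_pairs(shares))
```

Accounts A and B are flagged because they shared three items within seconds of each other; C shared one of the same items much later and is not.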

Misinformation bots can quickly disseminate information across platforms. This makes it difficult to control the spread of false narratives.

By reposting and sharing false information repeatedly, these bots can make a piece of fake news appear more credible and widely accepted. They can interact with real users by commenting and replying to posts, pushing false narratives into real conversations.

During elections and political events, misinformation bots can be used to spread propaganda, support specific candidates, or undermine opponents. High-profile examples include efforts to influence elections in the U.S., Europe, and other regions.

The rapid and widespread dissemination of false information can confuse the public and make it difficult for individuals to tell truth from fiction.

Impersonation Bots

Impersonation bots are designed to mimic real users, often to deceive others and gain their trust. These bots can be particularly dangerous, as they can trick individuals into sharing personal information, spreading misinformation, or engaging in financial transactions.

These bots often have profiles that look very similar to those of real users, complete with profile pictures, bios, and posts that mimic typical user behavior. They engage in conversations and interactions in ways that resemble those of real users.

This makes it difficult to distinguish them from genuine accounts. Some impersonation bots steal the identities of real people, using their photos and personal information to create fake accounts.

By mimicking real users, these bots can gain the trust of other users. This can lead to the sharing of personal information, which can then be exploited. They can spread false information under the guise of a trusted source.

These bots can be used to conduct financial scams by pretending to be someone the victim knows. They might ask for money, personal information, or access to accounts.

Detection Methods

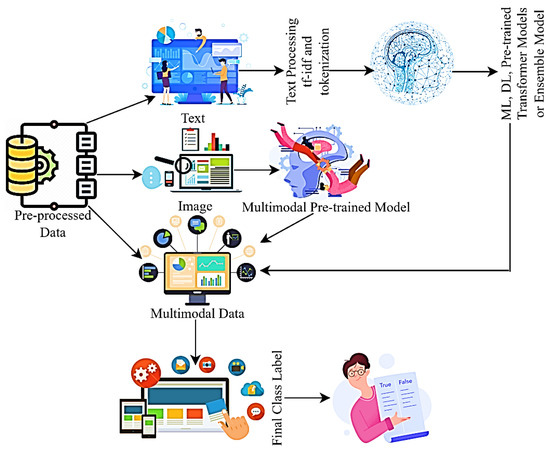

Social media platforms employ a variety of technologies and methods to detect bots and prevent their harmful activities. These methods combine technical sophistication with human oversight to identify and address the presence of bots.

Machine Learning Algorithms

Platforms use machine learning algorithms to analyze user behavior patterns. Bots often exhibit repetitive and automated behaviors, such as posting at regular intervals or following large numbers of accounts in a short time. By identifying these patterns, platforms can flag and investigate suspicious accounts.
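
"Posting at regular intervals" can be measured directly. A simple feature that such a model might use (an assumption here, not a documented platform feature) is the coefficient of variation of the gaps between posts: the standard deviation divided by the mean. Values near zero indicate clockwork-like timing. The Python sketch below computes it.

```python
from statistics import mean, pstdev

def interval_regularity(timestamps):
    """Coefficient of variation of the gaps between posts (std dev / mean).

    Values near 0 mean very regular, machine-like timing. Returns None when
    there are too few posts to judge. Hypothetical helper for illustration.
    """
    ts = sorted(timestamps)
    if len(ts) < 3:
        return None
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    avg = mean(gaps)
    if avg == 0:
        return 0.0  # every post at the same instant is also machine-like
    return pstdev(gaps) / avg


scheduled = [i * 600 for i in range(20)]  # exactly every 10 minutes
irregular = [0, 40, 900, 1000, 5000, 5200, 9000]
print(interval_regularity(scheduled))  # 0.0
print(interval_regularity(irregular))
```

This is one signal rather than a verdict. Legitimate scheduling tools also post at regular intervals, so a real system would weigh it alongside other features.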

Algorithms can also analyze the content users post. Bots might share identical or very similar posts across multiple accounts. Natural language processing (NLP) techniques help detect these anomalies, identifying bot-generated content based on linguistic patterns and frequency.
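
Production systems rely on much richer language models. As a simpler stand-in, the Python sketch below flags near-identical posts from different accounts by comparing word 3-gram "shingles" with Jaccard similarity (shared shingles divided by all distinct shingles). The function names and the 0.5 threshold are illustrative assumptions.

```python
import re
from itertools import combinations

def shingles(text, k=3):
    """Set of k-word sequences after lowercasing and dropping punctuation."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard(a, b):
    """Size of the intersection divided by size of the union (0.0 if both empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def near_duplicate_pairs(posts, threshold=0.5):
    """posts: {account: text}. Return account pairs whose texts overlap heavily."""
    sigs = {account: shingles(text) for account, text in posts.items()}
    return [(a, b) for a, b in combinations(sorted(sigs), 2)
            if jaccard(sigs[a], sigs[b]) >= threshold]


posts = {
    "acct_1": "Huge discount on crypto today click the link now",
    "acct_2": "Huge discount on crypto today, click the link now!! Limited offer",
    "acct_3": "Went hiking this weekend and the weather was perfect",
}
print(near_duplicate_pairs(posts))  # [('acct_1', 'acct_2')]
```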

CAPTCHAs and Turing Tests

CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) challenges are designed to verify whether a user is human. These tests often involve recognizing distorted text, selecting images based on a prompt, or completing other tasks that are easy for humans but difficult for bots.

Behavioral CAPTCHAs are more subtle and involve monitoring user behavior, such as mouse movements and typing patterns, which are hard for bots to mimic accurately.

IP Address Monitoring

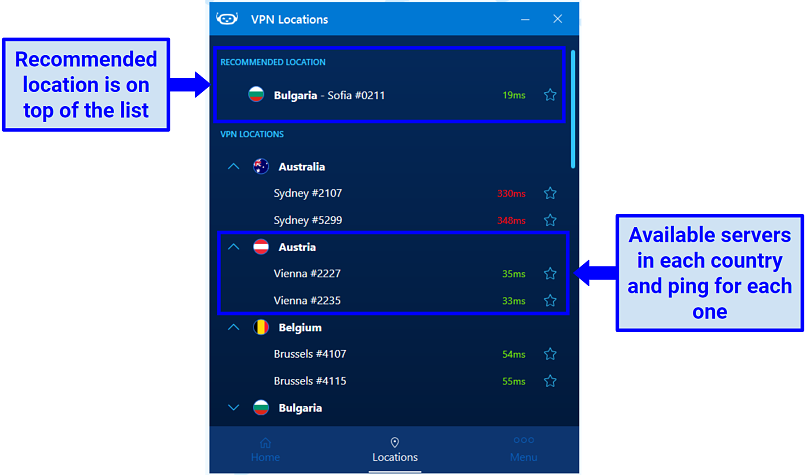

Platforms track the IP addresses of known bots and block them from accessing the site. If multiple accounts are created or operated from the same IP address, it raises a red flag.

Platforms can also spot anomalies by checking whether an account claims to be in one location but frequently logs in from another, which may indicate the VPNs or proxy servers that bots often use.
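
Both checks reduce to simple aggregations over login records. The Python sketch below assumes logins are available as `(account, ip)` pairs and that country codes have already been looked up by an IP-geolocation service, which is not shown. The function names and the five-accounts-per-IP limit are hypothetical. The example uses reserved documentation IP ranges.

```python
from collections import defaultdict

def crowded_ips(logins, max_accounts=5):
    """logins: iterable of (account, ip). Return IPs used by more than max_accounts accounts."""
    accounts_by_ip = defaultdict(set)
    for account, ip in logins:
        accounts_by_ip[ip].add(account)
    return {ip: accts for ip, accts in accounts_by_ip.items() if len(accts) > max_accounts}

def location_mismatch_ratio(claimed_country, login_countries):
    """Share of logins geolocated outside the profile's claimed country.

    login_countries is assumed to come from an IP-geolocation lookup done elsewhere.
    """
    if not login_countries:
        return 0.0
    return sum(c != claimed_country for c in login_countries) / len(login_countries)


logins = [(f"user{i}", "203.0.113.7") for i in range(8)] + [("alice", "198.51.100.2")]
print(sorted(crowded_ips(logins)))                             # ['203.0.113.7']
print(location_mismatch_ratio("US", ["US", "NL", "NL", "NL"]))  # 0.75
```

Both results are red flags rather than proof. Offices, universities, and mobile carriers put many real users behind one IP address, and plenty of people use VPNs for privacy.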

Human Moderation and Reporting

Social media platforms encourage users to report suspicious activities. Human moderators review these reports and take appropriate action against the identified bots.

In addition to automated systems, human moderators conduct manual reviews of flagged accounts to verify bot activity, especially in complex cases where automation might fall short.

Prevention Strategies

To combat the bot problem, social media platforms and users can adopt proactive measures that reduce the impact and prevalence of bots.

Enhanced Verification Processes

Implementing two-factor authentication (2FA) adds an extra layer of security by requiring users to verify their identity through a second method. This makes it harder for bots to create and control accounts.
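
The article does not say which second factor to use. One widely deployed option is a time-based one-time password (TOTP, RFC 6238) generated by an authenticator app. The Python sketch below uses only the standard library and follows the RFC's HMAC-SHA1 variant. The helper names and the one-step drift allowance are assumptions, and a real deployment should use a vetted library and store secrets securely.

```python
import hashlib
import hmac
import struct
import time

def totp(secret: bytes, for_time=None, step=30, digits=6) -> str:
    """RFC 6238 time-based one-time password (HMAC-SHA1 variant)."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)                      # number of elapsed time steps
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                           # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def verify(secret: bytes, submitted: str, for_time=None, step=30) -> bool:
    """Accept the current code or the previous step's code to allow for clock drift."""
    now = time.time() if for_time is None else for_time
    return any(hmac.compare_digest(totp(secret, now - d * step, step), submitted)
               for d in (0, 1))


# RFC 6238 test vector: ASCII secret "12345678901234567890" at T=59, 8 digits
print(totp(b"12345678901234567890", 59, digits=8))  # 94287082
```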

Requiring new accounts to verify their email addresses and phone numbers can reduce the ease with which bots are created.
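
A common way to verify an email address is to send a link containing a signed, expiring token and activate the account only when that token comes back intact. The Python sketch below shows the idea with the standard `hmac` module. `SERVER_KEY`, `make_token`, and `check_token` are hypothetical names, and generating the key at startup is for illustration only, since a real service would load a persistent secret.

```python
import hashlib
import hmac
import secrets
import time

SERVER_KEY = secrets.token_bytes(32)  # hypothetical; a real service loads a persistent secret

def _sign(payload: str) -> str:
    return hmac.new(SERVER_KEY, payload.encode(), hashlib.sha256).hexdigest()

def make_token(email: str, ttl=3600, now=None) -> str:
    """Token to embed in the verification link emailed to the new user."""
    issued = time.time() if now is None else now
    payload = f"{email}|{int(issued + ttl)}"
    return f"{payload}|{_sign(payload)}"

def check_token(token: str, now=None):
    """Return the email if the token is authentic and unexpired, otherwise None."""
    try:
        email, expires, sig = token.rsplit("|", 2)
    except ValueError:
        return None  # malformed token
    if not hmac.compare_digest(_sign(f"{email}|{expires}"), sig):
        return None  # forged or altered
    current = time.time() if now is None else now
    return email if current <= int(expires) else None


token = make_token("a@example.com", now=1000)
print(check_token(token, now=1500))  # a@example.com
print(check_token(token, now=5000))  # None (expired)
```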

Stricter Account Creation Policies

Platforms can impose limits on how many accounts can be created from a single IP address within a specific timeframe, reducing the mass creation of bot accounts.
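
A per-IP sign-up cap of this kind is typically enforced with a sliding-window rate limiter. The Python sketch below is a minimal in-memory version. The class name and the default of three sign-ups per 24 hours are assumptions, and a production system would keep this state in shared storage rather than in one process.

```python
from collections import defaultdict, deque

class SignupLimiter:
    """Allow at most `limit` account creations per IP within `window` seconds."""

    def __init__(self, limit=3, window=24 * 3600):
        self.limit = limit
        self.window = window
        self.events = defaultdict(deque)  # ip -> timestamps of recent sign-ups

    def allow(self, ip: str, now: float) -> bool:
        q = self.events[ip]
        # Drop sign-ups that have fallen out of the window
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False
        q.append(now)
        return True


limiter = SignupLimiter(limit=3, window=3600)
print([limiter.allow("203.0.113.7", t) for t in (0, 10, 20, 30)])  # [True, True, True, False]
```

A fixed cap like this can also block legitimate users who share an IP address, so platforms would likely pair it with other checks rather than rely on it alone.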

New accounts can be restricted in terms of the number of posts, likes, or follows they can perform within their initial days or weeks, making it harder for bots to operate effectively from the start.
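
Such graduated restrictions can be expressed as a small table of daily caps keyed by account age. The numbers and action names in this Python sketch are hypothetical placeholders, not any platform's real limits.

```python
# Hypothetical daily caps, keyed by minimum account age in days (ascending order)
LIMITS = [
    (0,  {"posts": 10,  "follows": 20,  "likes": 50}),
    (7,  {"posts": 50,  "follows": 100, "likes": 300}),
    (30, {"posts": 200, "follows": 400, "likes": 1000}),
]

def daily_cap(account_age_days: int, action: str) -> int:
    """Return the cap from the highest age tier the account has reached."""
    cap = LIMITS[0][1][action]
    for min_age, caps in LIMITS:
        if account_age_days >= min_age:
            cap = caps[action]
    return cap

def can_act(account_age_days: int, action: str, used_today: int) -> bool:
    """True if the account may perform one more `action` today."""
    return used_today < daily_cap(account_age_days, action)


print(daily_cap(2, "follows"), daily_cap(10, "follows"))  # 20 100
print(can_act(1, "likes", used_today=50))                 # False
```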

User Education and Awareness

Platforms can run campaigns to educate users about the signs of bot activity and the importance of reporting suspicious accounts. Providing users with tools to easily report and block suspected bots can enhance community-driven efforts to combat bots.

Battle Against Bots

The bot problem in social media is a complex and evolving challenge that impacts trust, security, and user experience. Current detection methods face significant challenges, requiring continuous innovation and user vigilance.

Proactive measures, enhanced verification processes, and collaborative efforts between platforms and users are essential in mitigating the harmful effects of bots. Addressing this issue is crucial for maintaining the integrity and reliability of social media as a space for genuine interaction and information sharing.

Discover how EvolveDash can help protect your brand from social media bots and enhance your online presence today!

FAQs

1. Can bots be used for legitimate purposes?

Yes, some bots are designed for legitimate purposes like customer service, sharing news updates, and aggregating content based on user interests. These bots enhance the user experience and streamline tasks on social media.

2. How can I tell if a social media account is a bot?

Signs of a bot account include an unusually high number of followers or posts in a short amount of time, a lack of meaningful engagement, and generic or incomplete profile information. These accounts often don’t interact authentically with others.

3. What can users do to protect themselves from bot-driven scams?

Users should avoid clicking on suspicious links, verify the legitimacy of accounts and offers, and report any bot activity. It’s also important to use strong passwords and enable two-factor authentication (2FA) for added security.